| Tags: | |

|---|---|

| Reference: | citeseer |

| Download: |

Fast Exact Inference with a Factored Model for Natural Language Parsing

Klein and Manning describe a novel model for parsing that combines two

different encodings for the parse tree: a simple PCFG, and a

dependency structure.

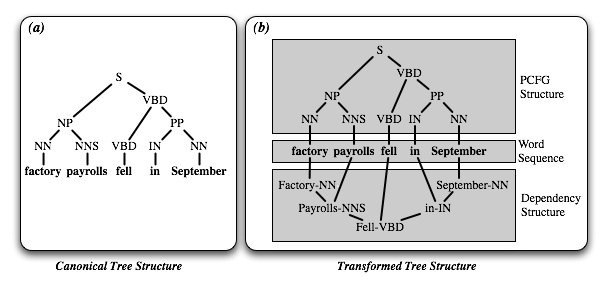

These two encodings are modelled independently, and then their

probabilities are combined by simple multiplication. In other words,

if  is a tree, and

is a tree, and  and

and  are encoding

functions mapping trees to PCFGs and dependency structures

respectively, then Klein and Manning model the probability of a tree

are encoding

functions mapping trees to PCFGs and dependency structures

respectively, then Klein and Manning model the probability of a tree

as:

as:

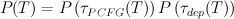

This decomposition is consistent with the common psycholinguistic belief that syntax and semantics are two relatively decoupled modules, with syntax responsible for constraining the set of acceptable structural configurations independent of individual lexical items, and semantics responsible for resolving ambiguities. The figure below illustrates how this factored model can be represented as an output encoding transformation.

Klein & Manning's Factored Model as a Tree

Transformation. Klein and Manning's factored parsing model

can be thought of as a tree transformation that

replaces the canonical structure (a) with a new structure

(b) that describes the sentence's structure using two

separate (disconnected) pieces: one describing the sentence's PCFG

structure, and the other describing its dependency structure.

can be thought of as a tree transformation that

replaces the canonical structure (a) with a new structure

(b) that describes the sentence's structure using two

separate (disconnected) pieces: one describing the sentence's PCFG

structure, and the other describing its dependency structure.

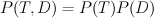

As Klein and Manning point out, this decomposition assigns probability

mass to invalid output structures. In particular, since the two

sub-models are entirely independent, there is nothing to prevent them

from building structures with different terminal strings. Klein and

Manning suggest that this problem could be alleviated by discarding

all inconsistent outputs, and re-normalizing the remaining

probabilities to sum to one. However, a more principled solution

might be switching from generative models to conditional models. In

particular, the above equation could be

replaced by the following conditional variant, where  is the input

sentence:

is the input

sentence:

Since both models are conditioned on  , they can no longer generate

incompatible terminal strings. [1]

, they can no longer generate

incompatible terminal strings. [1]

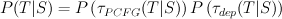

Using their factored model, Klein and Manning show that it is possible to perform efficient exact search using an A* parser. The A* algorithm provides guidance to a search problem by making use of an estimate of the cost of completing a given search path. If this estimate provides a lower bound on the cost, then the A* algorithm is guaranteed to find the optimal search path. In the context of bottom-up parsing, search paths correspond to phrase structures, and the cost of completing a search path is inversely related to the maximal "outside probability" of a given phrase structure:

Because the two factored models proposed by Klein and Manning are individually relatively simple, it is possible to calculate the outside probability for these individual models analytically. These two outside probabilities can then be combined to form an estimate of the outside probability in the joint model by simply multiplying them:

Using this estimate for the outside probability, Klein and Manning show that an A* parser using their factored model performs comparably to existing lexicalized parsers that use a joint model to learn lexical and structural preferences.

| [1] | This move to conditional models solves the problem of incompatible terminal strings, but applying the two models independently may still generate incompatible structures. In particular, dependency structures impose constraints on the set of possible phrase bracketings; and those constraints are not always compatible with all possible PCFG trees. This issue could be addressed by the renormalization trick proposed by Klein and Manning, or by adding a limited set of dependencies between the two models. |