Discriminative Learning over Constrained Latent Representations

Focus: Binary classification tasks that require an intermediate representation

General recipe for NLP problems: "Learning over Constrained Latent Representations" (LCLR)

Example domains: transliteration, paraphrase identification

Example task: paraphrase identification. Given two sentences, are they paraphrases of each other? The decision is binary, but making it well requires a complex intermediate representation. Here, that representation is an alignment between the two sentences.

The intermediate representation is a latent representation that allows us to justify a positive (negative) label.

Prior Work

Two-stage approach:

- Generate an intermediate representation (fixed)

- Extract features based on the intermediate representation

- Use those features for learning

Problem: the intermediate representation is fixed before learning and knows nothing about the binary task; a joint model could potentially yield a better intermediate representation.
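A minimal sketch of the two-stage pipeline for paraphrase identification. Everything concrete here is an assumption made for illustration, not taken from the notes: the exact-match alignment heuristic `fixed_alignment`, the single coverage feature in `extract_features`, and a perceptron as the learner. The point is structural: the alignment is computed once, before and independently of `train_perceptron`, so the classifier has no way to push the alignment toward what the label needs.

```python
from typing import Dict, List, Tuple

Alignment = List[Tuple[int, int]]
Features = Dict[str, float]


def fixed_alignment(s1: List[str], s2: List[str]) -> Alignment:
    """Stage 1 (hypothetical heuristic): link each word of s1 to the first
    unused, case-insensitively identical word of s2. Computed once, never revised."""
    alignment: Alignment = []
    used = set()
    for i, w in enumerate(s1):
        for j, v in enumerate(s2):
            if j not in used and w.lower() == v.lower():
                alignment.append((i, j))
                used.add(j)
                break
    return alignment


def extract_features(s1: List[str], s2: List[str], alignment: Alignment) -> Features:
    """Stage 2: features are read off the fixed alignment only."""
    n = max(len(s1), len(s2))
    return {"aligned_frac": len(alignment) / n if n else 0.0, "bias": 1.0}


def score(w: Features, feats: Features) -> float:
    """Linear score w . features."""
    return sum(w.get(k, 0.0) * v for k, v in feats.items())


def predict(w: Features, feats: Features) -> int:
    """Binary label in {+1, -1}."""
    return 1 if score(w, feats) > 0 else -1


def train_perceptron(data: List[Tuple[Features, int]], epochs: int = 10) -> Features:
    """Stage 3: a standard perceptron over the fixed features; labels are +1 / -1."""
    w: Features = {}
    for _ in range(epochs):
        for feats, y in data:
            if y * score(w, feats) <= 0:  # mistake or zero margin: update
                for k, v in feats.items():
                    w[k] = w.get(k, 0.0) + y * v
    return w
```

If the heuristic aligns the wrong words, no amount of training in stage 3 can repair that; this is the limitation LCLR targets.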

LCLR

- Jointly learns the intermediate representation and the labels

- Constraint-based inference for the intermediate representation

- Uses Integer Linear Programming (ILP) for inference over the latent variables
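The inference step (finding the best constrained intermediate representation for an input) can be sketched for the paraphrase case. This is an illustration under stated assumptions, not the LCLR implementation: the features in `feature_vector` and the single one-to-one constraint in `satisfies_constraints` are hypothetical, and brute-force enumeration stands in for the ILP solver, which is only feasible for very short sentences. Because the same weight vector `u` scores the representation that feeds the label decision, changing `u` during training also changes which alignment is selected.

```python
from itertools import product
from typing import Dict, List, Optional, Tuple

# h[i] = index of the s2 word aligned to s1[i], or None if s1[i] is unaligned
Alignment = Tuple[Optional[int], ...]


def feature_vector(s1: List[str], s2: List[str], h: Alignment) -> Dict[str, float]:
    """Phi(x, h): hypothetical features depending on both the sentence pair and the alignment."""
    phi = {"match": 0.0, "mismatch": 0.0, "unaligned": 0.0}
    for i, j in enumerate(h):
        if j is None:
            phi["unaligned"] += 1
        elif s1[i].lower() == s2[j].lower():
            phi["match"] += 1
        else:
            phi["mismatch"] += 1
    return phi


def satisfies_constraints(h: Alignment) -> bool:
    """Hypothetical constraint set C: each s2 word is aligned to at most one s1 word."""
    used = [j for j in h if j is not None]
    return len(used) == len(set(used))


def best_latent(s1: List[str], s2: List[str], u: Dict[str, float]) -> Tuple[Alignment, float]:
    """Return argmax_{h in C} u . Phi(x, h) and its score, by exhaustive enumeration
    (a stand-in for the ILP solver used at realistic sizes)."""
    best_h: Alignment = ()
    best_score = float("-inf")
    choices = [None] + list(range(len(s2)))
    for h in product(choices, repeat=len(s1)):
        if not satisfies_constraints(h):
            continue  # infeasible under C
        phi = feature_vector(s1, s2, h)
        s = sum(u.get(k, 0.0) * v for k, v in phi.items())
        if s > best_score:
            best_h, best_score = h, s
    return best_h, best_score
```

With weights that reward matches, penalize mismatches, and mildly penalize unaligned words, aligning "cat" to "cat" and leaving "the" unaligned beats forcing "the" onto "a".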

What we want from the intermediate representation:

- Only positive examples have good intermediate representations.

- No negative example has a good intermediate representation.
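One way to turn these two requirements into a per-example training signal is to apply a loss to the score of the best representation only. The sketch below assumes a hinge loss with margin 1 (an illustrative choice, not necessarily the one in the notes) and assumes the scores `u . Phi(x, h)` for every feasible `h` have already been enumerated. It shows the asymmetry: a positive example is satisfied as soon as one representation scores well, while a negative example is penalized if any representation does.

```python
from typing import Sequence


def latent_hinge_loss(scores: Sequence[float], y: int) -> float:
    """Hinge loss on the best representation: max(0, 1 - y * max_h score(h)).

    scores[k] is assumed to be u . Phi(x, h_k) for every h_k in the constraint set C;
    y is +1 (positive example) or -1 (negative example).
    """
    best = max(scores)  # only the best-scoring representation matters
    return max(0.0, 1.0 - y * best)
```

For a positive example, one high-scoring `h` drives the loss to zero regardless of the others; for a negative example, the same single high-scoring `h` is enough to incur loss, so every representation must be pushed down.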

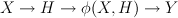

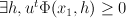

For a positive example $x$, at least one intermediate representation $h$ is good:

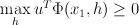

For a negative example $x$, no intermediate representation $h$ is good:

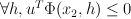

So the prediction function is: